Cars Data Engineering Project

- Jesse Pepple

- Oct 3, 2025

- 5 min read

Updated: Jan 8

This project implemented Unity Catalog in Databricks to provide secure, centralized data governance, while leveraging Delta Lake and Delta Tables for scalable and efficient data storage. The data pipeline followed the Medallion Architecture (Bronze → Silver → Gold), ensuring data quality, reliability, and usability at every stage. The solution was built using Azure Data Factory, Azure SQL Database, Azure Data Lake Storage, and Azure Databricks, with Python and SQL as the primary languages for data transformation and scripting.

Azure Services:

Azure Databricks

Azure Data Factory (ADF)

Azure Data Lake Storage (ADLS Gen2)

Azure SQL Database

Azure Synapse Analytics

Databricks Components:

Delta Lake & Delta Tables

Unity Catalog (governance)

Databricks SQL Warehouse

Languages & Tools:

Python (PySpark / notebooks)

SQL

Key Takeaways / Project Impact

Governance: Unity Catalog reinforced the importance of centralized security and access control

Incremental Pipeline Design: Balanced efficiency and scalability for regular updates

Transformation Awareness: Even minimal transformations can impact downstream processes

Dimensional Modeling: Proper creation of dimension and fact tables with surrogate keys ensures query performance and analytical integrity

Portfolio Impact: Demonstrates ability to design and implement end-to-end data pipelines with governance, scalability, and analytical readiness

For the Cars project, I started by provisioning and organizing all necessary resources within a dedicated Azure Resource Group, ensuring a clean and manageable environment. I then initiated the data ingestion process using Azure Data Factory, bringing in raw data directly from GitHub into an Azure SQL Database. This step established a reliable and structured foundation for subsequent transformations, enabling efficient processing, analysis, and eventual delivery of high-quality datasets for downstream use. Mainly the focus was testing out incremental loading using stored procedures for Watermark Tables, this required alot of proper research from various sources.

Initial and Incremental Load

The next stage of the project involved building a robust pipeline to move data from Azure SQL Database into Azure Data Lake Storage (ADLS). A key focus was designing both initial and incremental loading processes to ensure efficient, reliable, and consistent data updates. For incremental loads, I determined the current load with:

SELECT MAX(Date_ID) AS max_date FROM source_cars_data;

and compared it with the previous load stored in the watermark table:

SELECT * FROM water_table;

This approach allowed the pipeline to capture only new or updated records, minimizing unnecessary processing and maintaining the integrity of the dataset in the lake.

Goal: Efficiently ingest raw data from GitHub into Azure SQL Database.

KPIs & Metrics:

Source Integration: Connected GitHub as the primary raw data source

Initial Load: Successfully ingested full dataset into SQL Database

Incremental Load: Implemented stored procedures to manage incremental updates

Data Validation: Initial ingestion validated with no missing or corrupted rows

Result Statement:

Built a reliable pipeline for raw data ingestion, supporting both initial and incremental loads for scalable updates.

Phase 2 Transformations

Following the data ingestion into ADLS, I implemented Unity Catalog to ensure secure, centralized governance across the datasets. Next, I set up the Databricks environment to handle the core transformation tasks. The required transformations were minimal but strategic. The key adjustment involved splitting the Model_Id field into a new Model Category, which not only improved the dataset’s structure but also laid the groundwork for managing Slowly Changing Dimensions (SCD Type 1) and preparing the data for further refinement in downstream processes.

Leveraging Unity Catalog made accessing the data lake straightforward and efficient. All I needed to do was create a schema and set up external locations linked to the corresponding container in our data lake. This setup provided a secure and organized structure for the transformed data, simplifying both access and management while ensuring governance best practices were maintained.

Bronze → Silver Layer Transformation

Goal: Clean and prepare data for analytical use.

KPIs & Metrics:

Minimal Transformations: Split Model_ID into Model Category for SCD preparation

SCD Type 1 Readiness: Prepared dimension keys and surrogate keys for downstream processing

Databricks Environment: Configured notebooks and parameterized pipelines for transformations

Result Statement:

Successfully transformed raw data into an organized Silver layer, ready for enrichment and analytical modeling.

Phase 3 Serving

In Phase 3, the main focus was implementing the Slowly Changing Dimensions (SCD) process. This involved cleaning the data and creating the necessary keys to build the final tables. I constructed the dimension model and generated surrogate keys for entities such as DealerName/DealerID, ModelID/ModelCategory, Date_ID, and BranchID/BranchName. These dimensions were then joined in the Fact Sales table to finalize the Star Schema, optimizing the data for analytics and reporting. Once the schema was complete, I planned and configured the pipeline to handle both the initial load (stored in the Databricks warehouse) and incremental loads, ensuring the data pipeline could refresh efficiently as new records arrived.

Final Pipeline

I ran the initial load

To further validate the pipeline, I introduced changes to the source data and re-ran the process. The updates were handled seamlessly, confirming that the Slowly Changing Dimensions (SCD Type 1) logic functioned as intended. This final step demonstrated the robustness and reliability of the design, concluding the project with a scalable and efficient data pipeline ready for ongoing analytics and reporting.

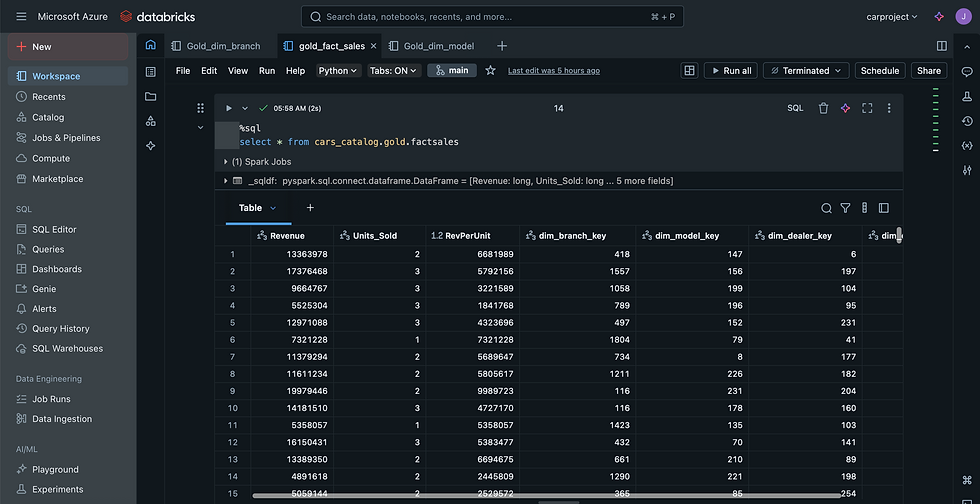

To validate the final output, I tested the data by running SQL queries in the Databricks SQL Warehouse, simulating the type of exploration a data analyst would perform. This confirmed that the data was well-modeled, accurate, and ready for analysis, demonstrating that the pipeline successfully delivered business-ready, high-quality datasets. With this final step, the project concluded, showcasing a robust, scalable, and reliable data engineering workflow.

Gold Layer & Star Schema

Goal: Implement a robust dimensional model optimized for analytics.

KPIs & Metrics:

Dimension Tables: Generated surrogate keys for Dealer, Model, Date, and Branch

Fact Table: Integrated dimensions into Fact Sales table

SCD Type 1: Validated that incremental updates correctly updated dimensions and fact tables

Result Statement:

Created a fully functional Star Schema, supporting both initial and incremental data loads, ensuring accurate and analyst-ready data.

Github Link:

If interested in the codes and notebooks of my project below is my link

Data Serving & Validation

Goal: Make curated data available for analysis and reporting.

KPIs & Metrics:

Databricks SQL Warehouse: Validated tables through queries and exploration

Incremental Updates: Confirmed new changes handled correctly by SCD Type 1 logic

Pipeline Robustness: Demonstrated reliable end-to-end execution

Result Statement:

Delivered accurate, curated, and business-ready datasets, enabling analysts to explore and report on data efficiently.

Lessons Learned

Working through this project provided valuable insights into the end-to-end data engineering lifecycle. Some key takeaways include:

Importance of Governance – Leveraging Unity Catalog reinforced the value of centralized governance and security when handling multiple data sources and collaborating across teams.

Incremental vs. Initial Loads – Designing for both scenarios highlighted the need for efficient and scalable pipelines that can refresh regularly without compromising data integrity.

Transformation Complexity – Even seemingly simple transformations in the Silver layer can have downstream impacts, especially when preparing surrogate keys for Slowly Changing Dimensions (SCDs).

Data Modeling Best Practices – Building the Star Schema emphasized the importance of carefully structuring dimension and fact tables to ensure analytical queries run effectively and reliably.

Overall, the Cars project strengthened my ability to design and implement scalable, secure, and high-performance data pipelines, balancing governance, performance, and usability to deliver business-ready datasets.

Comments